SYCL on Intel GPUs: GROMACS Performance Insights

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Research Poster

Bioinformatics and Life SciencesParallel Programming Languages

Information

Poster is on display and will be presented at the poster pitch session.

Molecular dynamics (MD) simulations have long been a staple of high-performance computing. MD algorithms iteratively solve Newton's equations of motion to model complex systems, typically containing hundreds of thousands of particles. The computation of inter-particle forces is a natural fit to the massively-parallel architecture of the GPUs, placing MD among the earliest adopters of general-purpose GPUs.

The iterative nature of the algorithm makes it latency sensitive. A typical time step for full-atom biophysical simulations is a few femtoseconds, while the biological processes of interest happen at scale of milliseconds or longer. As computational performance has increased while data-transfer performance and the sizes of the modelled systems remained relatively constant, the performance bottleneck has started to shift from floating-point intensity to latency, requiring changes in algorithms and the underlying heterogeneous offload runtimes.

GROMACS is a widely used MD package, supporting a wide range of hardware and software platforms, from laptops to largest supercomputers. It uses a flexible, multi-level parallelization scheme, utilizing MPI for inter-node parallelism, OpenMP for intra-rank multithreading, and SIMD for low-latency, high-throughput operations on the CPU. As many other MD packages, GROMACS has been among the early adopters of GPU acceleration. While the development was focused on CUDA for many years, now the hardware landscape is more diverse, with the first exascale systems using Intel and AMD GPUs in production.

Diverse hardware architecture makes programming heterogeneous systems challenging, as both the vendor-specific programming models and differences in low-level accelerator architecture need to be dealt with. The limited OpenCL support from hardware vendors - including lack of GPU-aware MPI and high-performance math libraries - necessitated a switch to a new backend. This led to the adoption of SYCL, an open standard maintained by the Khronos Group, which provides a portable, vendor-neutral programming model.

SYCL builds on top of the modern C++17 programming language, which is used in GROMACS, and allows developers to write single-source programs targeting a wide range of devices, such as CPUs, GPUs, FPGAs, and other specialized accelerators. Two major SYCL implementations, Intel oneAPI DPC++ and AdaptiveCpp, support GPUs from all three major vendors. The use of a single codebase for multiple target devices promises to simplify the development and maintenance of HPC applications.

Since version 2023, GROMACS has used SYCL as the primary means of targeting AMD and Intel GPUs. On AMD MI250X-based platforms, GROMACS was performing efficiently and scaling to hundreds of nodes using its SYCL backend.

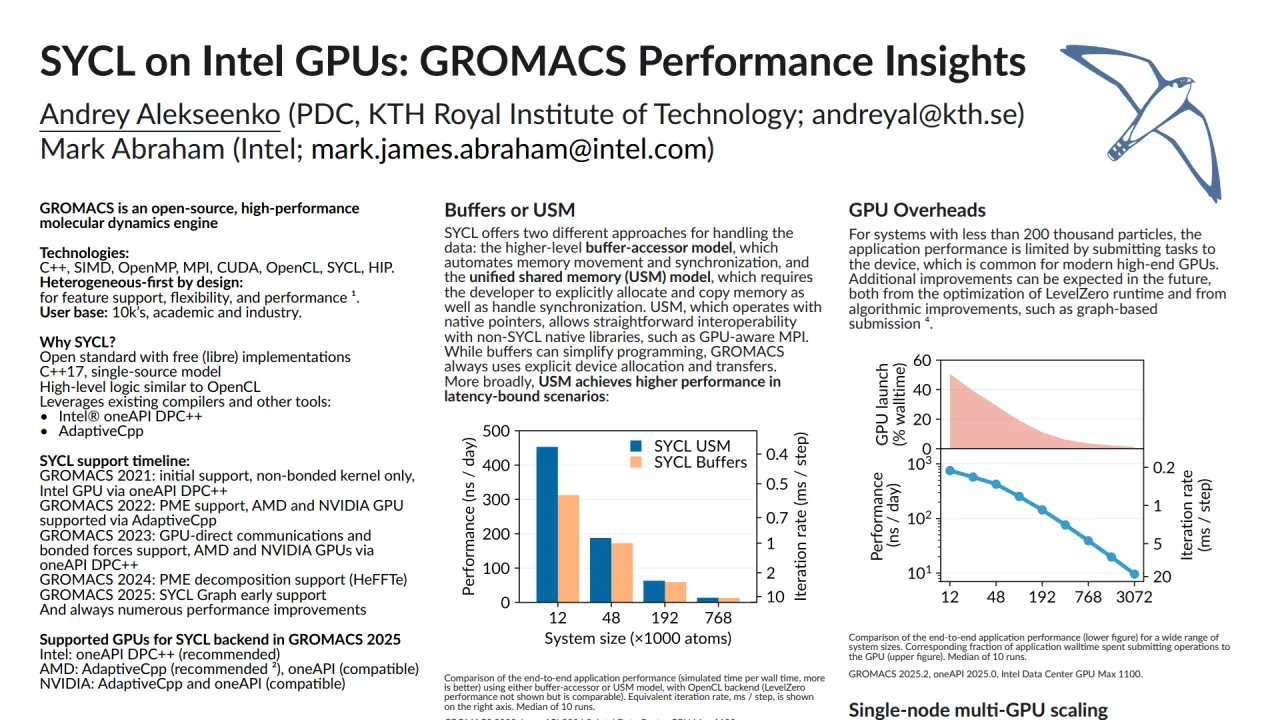

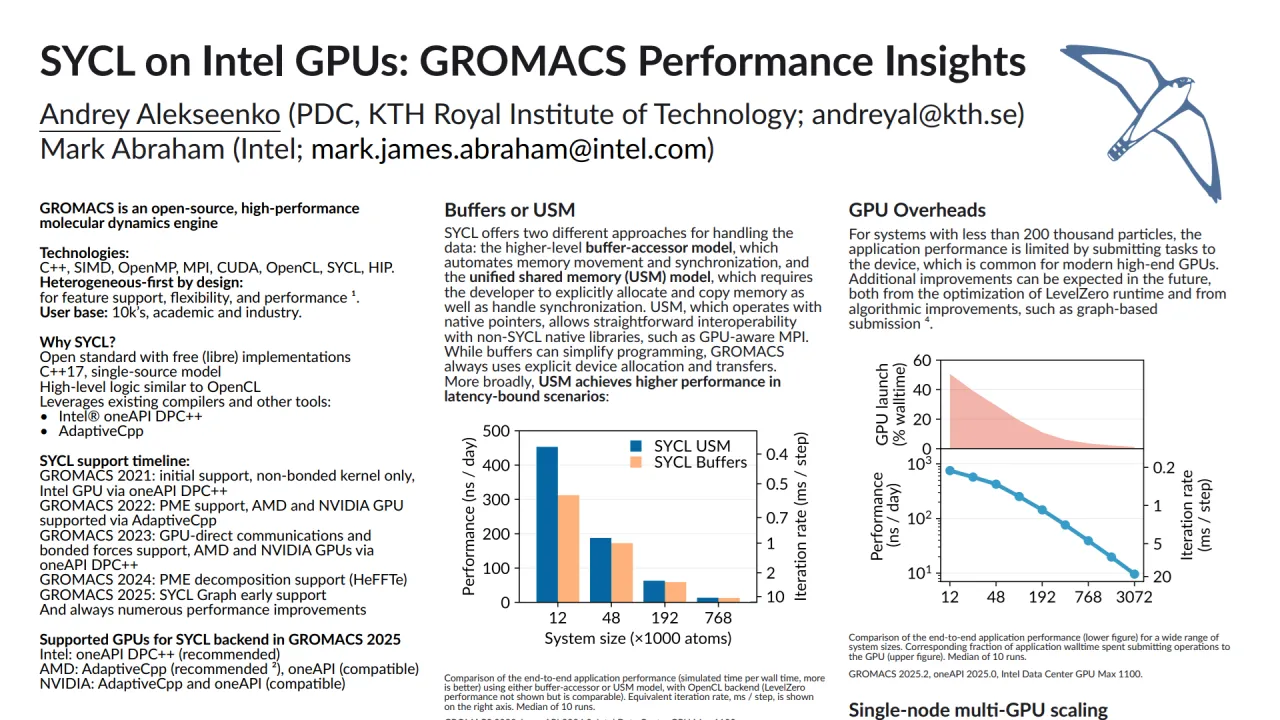

In this poster, we present the current state of the SYCL backend in GROMACS on Intel Data Center GPU Max. We measure the effect of host-side scheduling overheads, and how it translates to the overall application performance, characterize the effect of SYCL data management model (buffers or USM) and different tunables of the oneAPI software stack, and show the multi-node strong-scaling performance on SuperMUC-NG Phase 2.

Contributors:

Molecular dynamics (MD) simulations have long been a staple of high-performance computing. MD algorithms iteratively solve Newton's equations of motion to model complex systems, typically containing hundreds of thousands of particles. The computation of inter-particle forces is a natural fit to the massively-parallel architecture of the GPUs, placing MD among the earliest adopters of general-purpose GPUs.

The iterative nature of the algorithm makes it latency sensitive. A typical time step for full-atom biophysical simulations is a few femtoseconds, while the biological processes of interest happen at scale of milliseconds or longer. As computational performance has increased while data-transfer performance and the sizes of the modelled systems remained relatively constant, the performance bottleneck has started to shift from floating-point intensity to latency, requiring changes in algorithms and the underlying heterogeneous offload runtimes.

GROMACS is a widely used MD package, supporting a wide range of hardware and software platforms, from laptops to largest supercomputers. It uses a flexible, multi-level parallelization scheme, utilizing MPI for inter-node parallelism, OpenMP for intra-rank multithreading, and SIMD for low-latency, high-throughput operations on the CPU. As many other MD packages, GROMACS has been among the early adopters of GPU acceleration. While the development was focused on CUDA for many years, now the hardware landscape is more diverse, with the first exascale systems using Intel and AMD GPUs in production.

Diverse hardware architecture makes programming heterogeneous systems challenging, as both the vendor-specific programming models and differences in low-level accelerator architecture need to be dealt with. The limited OpenCL support from hardware vendors - including lack of GPU-aware MPI and high-performance math libraries - necessitated a switch to a new backend. This led to the adoption of SYCL, an open standard maintained by the Khronos Group, which provides a portable, vendor-neutral programming model.

SYCL builds on top of the modern C++17 programming language, which is used in GROMACS, and allows developers to write single-source programs targeting a wide range of devices, such as CPUs, GPUs, FPGAs, and other specialized accelerators. Two major SYCL implementations, Intel oneAPI DPC++ and AdaptiveCpp, support GPUs from all three major vendors. The use of a single codebase for multiple target devices promises to simplify the development and maintenance of HPC applications.

Since version 2023, GROMACS has used SYCL as the primary means of targeting AMD and Intel GPUs. On AMD MI250X-based platforms, GROMACS was performing efficiently and scaling to hundreds of nodes using its SYCL backend.

In this poster, we present the current state of the SYCL backend in GROMACS on Intel Data Center GPU Max. We measure the effect of host-side scheduling overheads, and how it translates to the overall application performance, characterize the effect of SYCL data management model (buffers or USM) and different tunables of the oneAPI software stack, and show the multi-node strong-scaling performance on SuperMUC-NG Phase 2.

Contributors:

Format

On DemandOn Site

Speakers

Andrey Alekseenko

ResearcherKTH