Design and Implementation of a GPU-Aware MPI Collective Library for Intel GPUs

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Research Poster

Heterogeneous System ArchitecturesRuntime Systems for HPC

Information

Poster is on display and will be presented at the poster pitch session.

In the evolving landscape of high-performance computing (HPC) and deep learning (DL), GPUs are crucial for delivering the computational power needed for data-intensive applications. As GPUs become integral to HPC and DL, efficient communication libraries are essential to optimize data movement across these accelerators. While Intel joined the GPU market later than competitors like NVIDIA and AMD, its Intel Data Center GPU Max series is now part of top-tier systems like Aurora, currently ranked second in the TOP500. Efficient MPI collective communication, particularly for Intel GPUs, is crucial for maximizing performance in these dense GPU systems.

The challenge is to develop high-performance MPI runtimes that efficiently manage both inter- and intra-node communication among GPUs. Intel’s technologies, including oneAPI and SYCL, facilitate bulk data transfers and memory synchronization across multiple GPUs via Intel X𝑒 links. These capabilities are leveraged by HPC applications like heFFTe for GPU-aware collectives and by DL applications for data-movement and reduction collectives to aggregate gradients. Thus, a robust MPI library optimized for Intel GPUs is essential.

Existing MPI libraries, such as Intel MPI and MPICH, have GPU-aware designs for Intel GPUs but remain inefficient, relying on point-to-point send/recv operations that introduce unnecessary overhead. Their reduction collectives offload device data to CPU buffers for reduction, further limiting performance. Efficient GPU-aware MPI collective runtimes for Intel GPUs are needed to address these shortcomings.

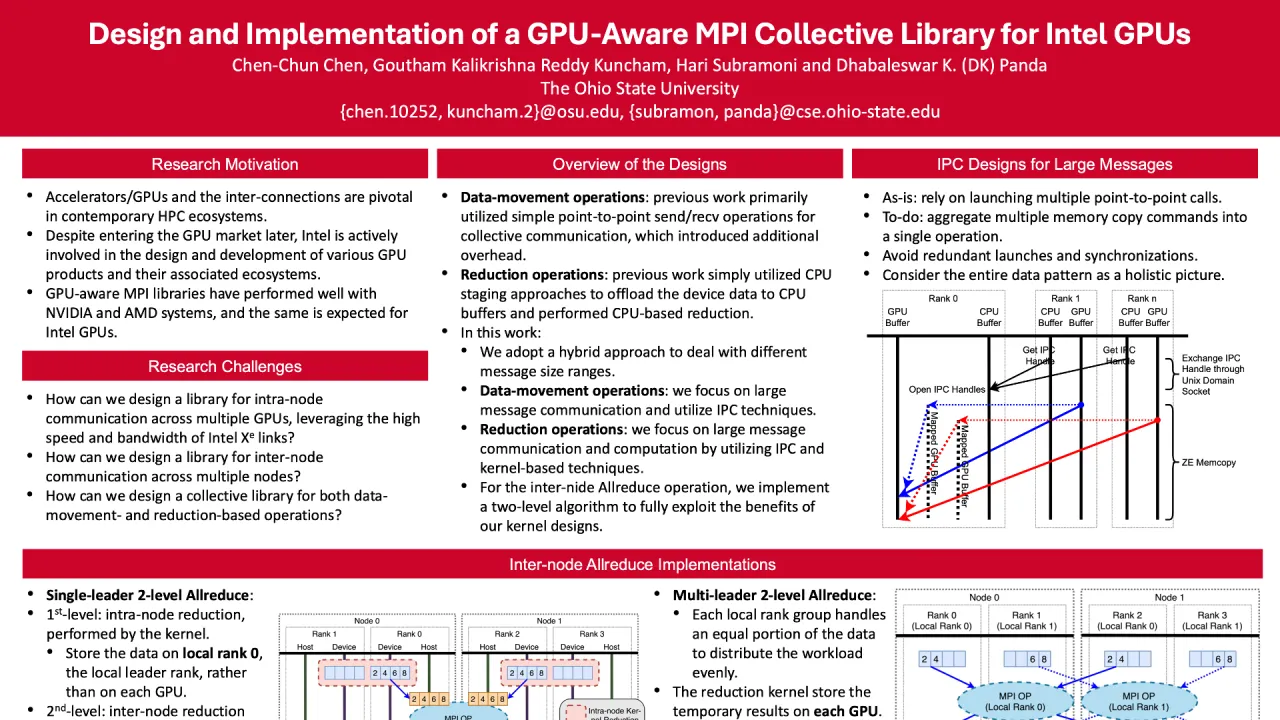

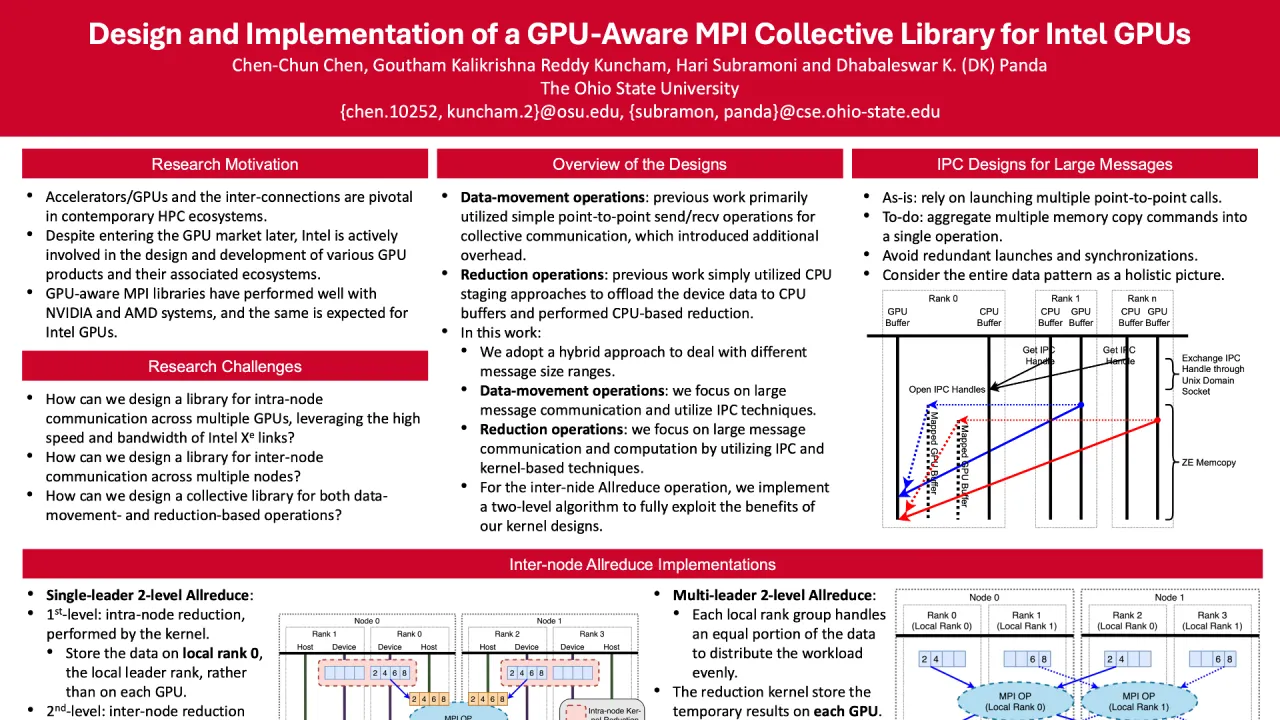

In this work, we propose hybrid and IPC-based designs for data movement and reduction collectives optimized for Intel GPUs. For small messages, we use CPU staging and optimize performance through comparisons with various memory copying mechanisms. For large messages, we aggregate multiple memory copy commands into a single list to reduce redundant launches and synchronizations, moving beyond basic send/recv pairs. For reduction collectives, we present a kernel-based reduction MPI library using IPC techniques to minimize data movement and efficient GPU kernels for computation and intra-node data transfer. Our focus is on optimizing the Allreduce operation due to its high communication traffic. We develop a unified reduction kernel and a specialized intra-node Allreduce kernel to address the broadcast step. Additionally, we implement a two-level algorithm for efficient inter-node Allreduce communication.

Our designs are evaluated through benchmark and application tests on various systems. On the Intel DevCloud, our Alltoall and Allgather implementations achieve a 100 µs improvement for large messages, while Bcast shows a 72x and Alltoallv a 100x performance gain compared to MPICH or Intel MPI at 32MB. Application tests show a 33x improvement in the heFFTe HPC application over MPICH. On ACES and Stampede3 systems, our Allreduce implementations deliver up to an 11x boost at 1GB with 8 GPUs and a 42% improvement with 32 GPUs. Application-level tests reveal up to 22% improvement for TensorFlow with Horovod and 28% for PyTorch with Horovod on 32 GPUs over Intel MPI.

Contributors:

In the evolving landscape of high-performance computing (HPC) and deep learning (DL), GPUs are crucial for delivering the computational power needed for data-intensive applications. As GPUs become integral to HPC and DL, efficient communication libraries are essential to optimize data movement across these accelerators. While Intel joined the GPU market later than competitors like NVIDIA and AMD, its Intel Data Center GPU Max series is now part of top-tier systems like Aurora, currently ranked second in the TOP500. Efficient MPI collective communication, particularly for Intel GPUs, is crucial for maximizing performance in these dense GPU systems.

The challenge is to develop high-performance MPI runtimes that efficiently manage both inter- and intra-node communication among GPUs. Intel’s technologies, including oneAPI and SYCL, facilitate bulk data transfers and memory synchronization across multiple GPUs via Intel X𝑒 links. These capabilities are leveraged by HPC applications like heFFTe for GPU-aware collectives and by DL applications for data-movement and reduction collectives to aggregate gradients. Thus, a robust MPI library optimized for Intel GPUs is essential.

Existing MPI libraries, such as Intel MPI and MPICH, have GPU-aware designs for Intel GPUs but remain inefficient, relying on point-to-point send/recv operations that introduce unnecessary overhead. Their reduction collectives offload device data to CPU buffers for reduction, further limiting performance. Efficient GPU-aware MPI collective runtimes for Intel GPUs are needed to address these shortcomings.

In this work, we propose hybrid and IPC-based designs for data movement and reduction collectives optimized for Intel GPUs. For small messages, we use CPU staging and optimize performance through comparisons with various memory copying mechanisms. For large messages, we aggregate multiple memory copy commands into a single list to reduce redundant launches and synchronizations, moving beyond basic send/recv pairs. For reduction collectives, we present a kernel-based reduction MPI library using IPC techniques to minimize data movement and efficient GPU kernels for computation and intra-node data transfer. Our focus is on optimizing the Allreduce operation due to its high communication traffic. We develop a unified reduction kernel and a specialized intra-node Allreduce kernel to address the broadcast step. Additionally, we implement a two-level algorithm for efficient inter-node Allreduce communication.

Our designs are evaluated through benchmark and application tests on various systems. On the Intel DevCloud, our Alltoall and Allgather implementations achieve a 100 µs improvement for large messages, while Bcast shows a 72x and Alltoallv a 100x performance gain compared to MPICH or Intel MPI at 32MB. Application tests show a 33x improvement in the heFFTe HPC application over MPICH. On ACES and Stampede3 systems, our Allreduce implementations deliver up to an 11x boost at 1GB with 8 GPUs and a 42% improvement with 32 GPUs. Application-level tests reveal up to 22% improvement for TensorFlow with Horovod and 28% for PyTorch with Horovod on 32 GPUs over Intel MPI.

Contributors:

Format

On DemandOn Site