Context Sensitivity in LLM-Driven Parallel Scientific Code Generation and Translation

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Research Poster

Compiler and Tools for Parallel Programming

Large Language Models and Generative AI in HPC

Information

Poster is on display and will be presented at the poster pitch session.

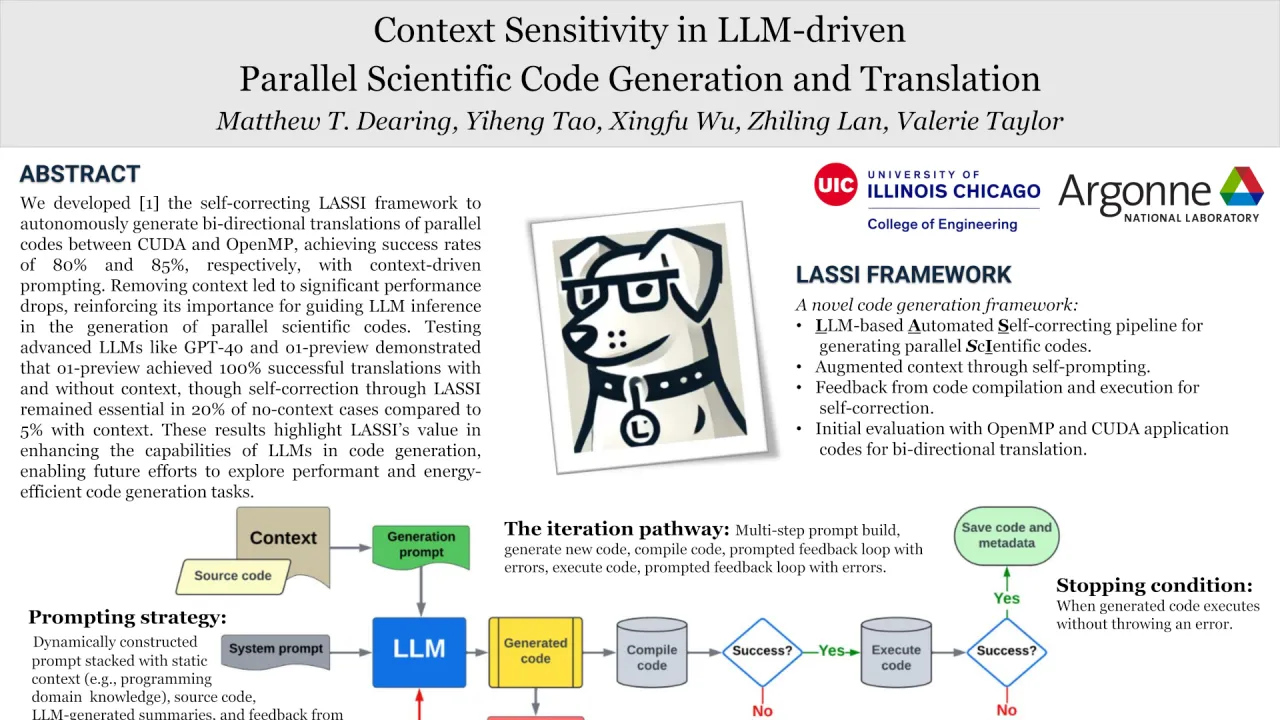

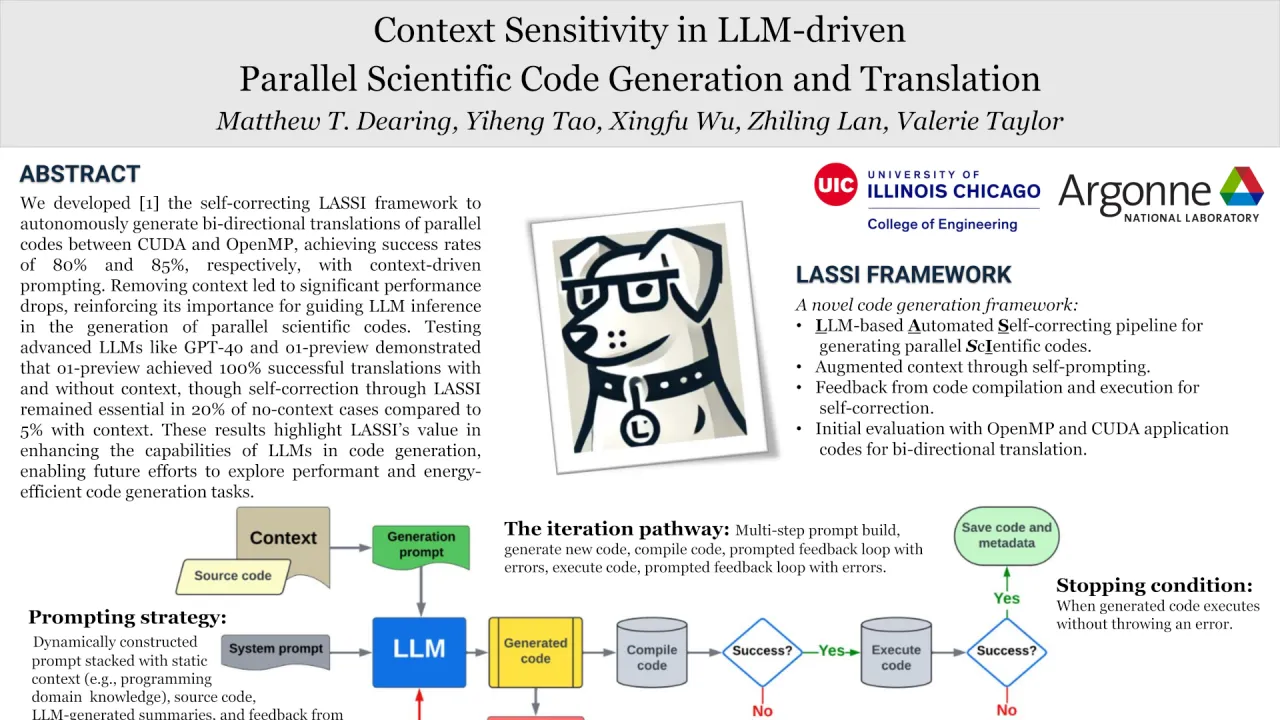

Following our introduction of the LASSI framework for autonomously translating parallel codes, we investigate how sensitive large language models (LLMs) are to the contextual information that guides their inference. Central to LASSI’s performance are self-correcting feedback loops that respond to the compilation and execution of code generated by a given LLM, which is guided by targeted prompt engineering, self-prompting, and programming language-specific context. Using this context in the prompting strategy, LASSI generated bi-directional translations that execute with the same output as their source in 80% of our sampled OpenMP to CUDA translations and 85% of CUDA to OpenMP translations. While we expect context to affect LASSI directly, further investigation is needed to understand how sensitive code generation is to it. Removing the context in an otherwise identical experiment significantly degraded LASSI’s ability: only 45% of OpenMP to CUDA and 43% of CUDA to OpenMP translations produced executable code with the same output. This initial observation reinforces our expectation that context supports LLM inference during code generation tasks.
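The self-correcting feedback loop described above can be illustrated with a minimal sketch. This is not LASSI’s implementation: the function `translate_with_feedback`, the `Attempt` record, and the `generate` and `build_and_run` callables are hypothetical names introduced here, and the prompt wording is an assumption. The sketch only shows the general idea of feeding compilation or execution failures back into the next prompt until the generated code reproduces the source program’s output.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Attempt:
    """One round of generation: the candidate code and what went wrong, if anything."""
    code: str
    ok: bool
    feedback: str


def translate_with_feedback(
    source_code: str,
    expected_output: str,
    generate: Callable[[str], str],                    # hypothetical LLM call: prompt -> code
    build_and_run: Callable[[str], Tuple[bool, str]],  # hypothetical: code -> (built, output or error)
    context: str = "",
    max_rounds: int = 3,
) -> Tuple[Optional[str], List[Attempt]]:
    """Ask for a translation, then retry with error feedback until outputs match."""
    prompt = f"{context}\nTranslate this code:\n{source_code}"
    history: List[Attempt] = []
    for _ in range(max_rounds):
        candidate = generate(prompt)
        built, output = build_and_run(candidate)
        if built and output == expected_output:
            history.append(Attempt(candidate, True, ""))
            return candidate, history
        # Compilation errors are passed back verbatim; output mismatches are described.
        if built:
            feedback = f"expected output {expected_output!r}, got {output!r}"
        else:
            feedback = output
        history.append(Attempt(candidate, False, feedback))
        prompt = (
            f"{context}\nThe previous attempt failed:\n{feedback}\n"
            f"Fix this code:\n{candidate}"
        )
    # No candidate matched the source program's output within the round limit.
    return None, history
```

In this sketch, passing an empty `context` corresponds loosely to the no-context experiment, while a non-empty string stands in for the language-specific context supplied in the prompting strategy.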

These results demonstrated capability improvements across multiple code-centric open-source and pre-“omni” OpenAI models. We therefore expanded this work to test the latest LLMs, including GPT-4o and o1-preview. With context provided through LASSI, both models achieved 100% successful code translations in both directions, OpenMP to CUDA and CUDA to OpenMP. Without context, both models still achieved 100% successful translations from CUDA to OpenMP; from OpenMP to CUDA, GPT-4o generated 80% successful translations, while o1-preview again achieved 100%. This outcome highlights the continued improvement of foundation model capabilities for this code generation task. However, additional support, such as our LASSI framework, remains necessary to achieve fully autonomous generation. We also anticipate that parallel code translations longer and more complex than those explored here will benefit from LASSI. Our future efforts will explore the generation of performant and energy-efficient parallel codes.

Contributors:

Format

On Demand, On Site