ChASE: An Application-Driven Eigensolver in the Era of Exascale Computing

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Research Poster

Extreme-scale AlgorithmsNovel AlgorithmsNumerical Libraries

Information

Poster is on display and will be presented at the poster pitch session.

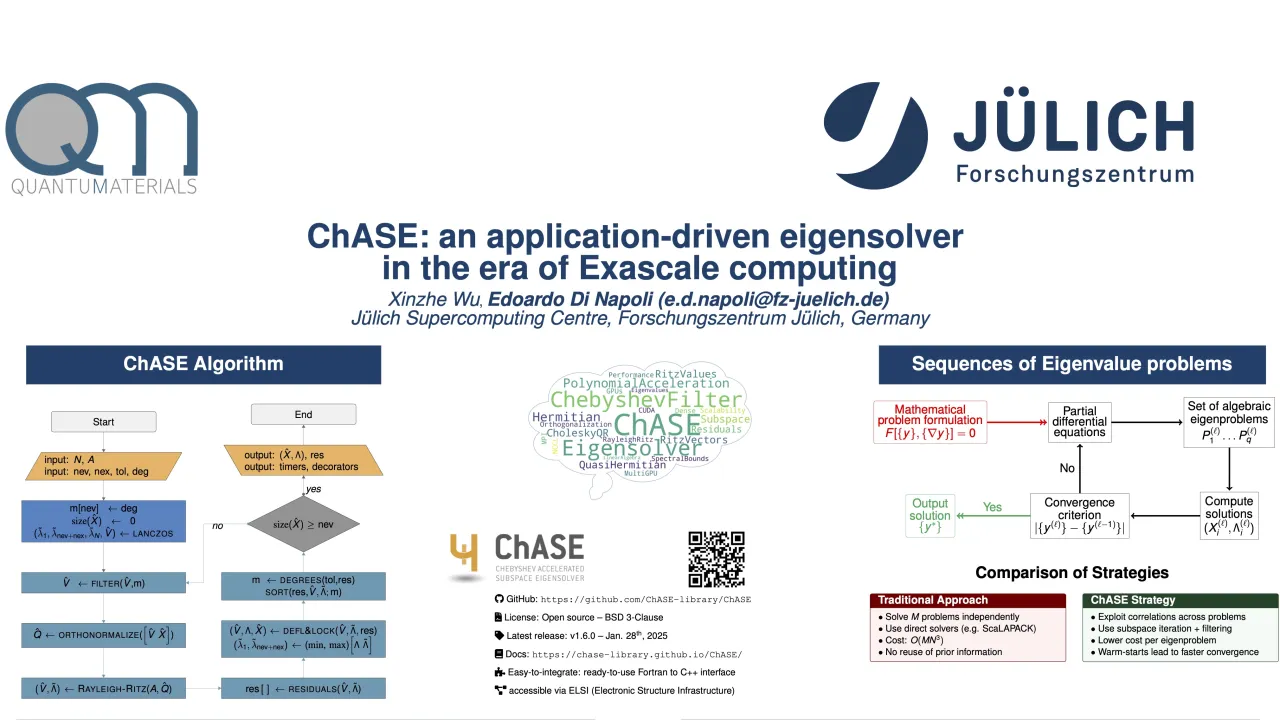

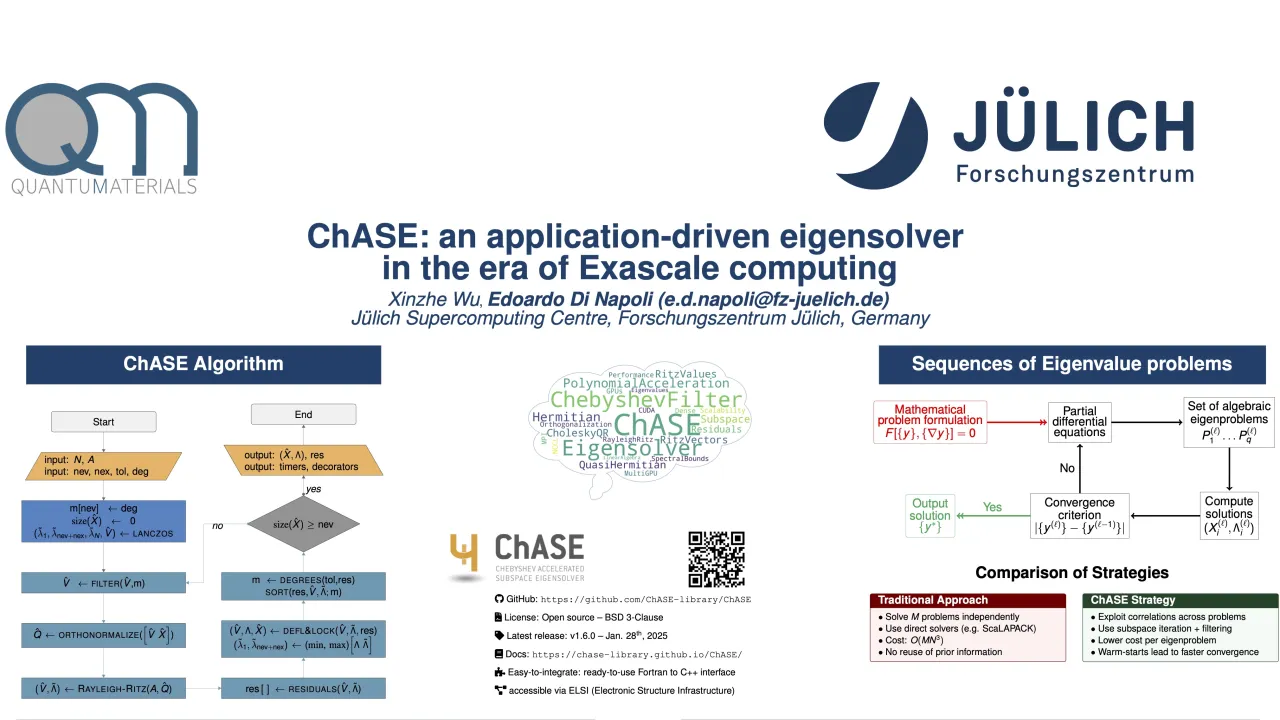

As supercomputing platforms evolve, traditional direct eigensolvers struggle to scale effectively due to memory constraints and communication bottlenecks. Lack of scaling becomes particularly acute when computing extremal eigenpairs for dense Hermitian matrices, which are essential in applications such as condensed matter physics and quantum chemistry. The Chebyshev Accelerated Subspace Eigensolver (ChASE) offers a scalable alternative by leveraging iterative methods and Chebyshev polynomial filters. However, earlier versions of ChASE faced limitations in distributed multi-GPU environments, including redundant computations, inefficient communication patterns, and excessive memory usage.

To overcome these challenges, we present a significantly enhanced ChASE implementation. First, we redesigned the parallelization scheme, distributing computational tasks like QR factorization and Rayleigh-Ritz projection across a 2D MPI grid. This reduces redundancy and optimizes memory usage. Second, communication-avoiding CholeskyQR algorithms were introduced, replacing traditional Householder QR factorization. Guided by accurate condition number estimation, these variants provide a balance between stability and efficiency. Third, NVIDIA’s NCCL library was integrated to replace MPI-based communication, enabling GPU-native collective operations and eliminating host-device data transfers.

Our optimized ChASE implementation scales to problem sizes up to N=10^6 and operates efficiently on JUWELS-Booster, a 900-node supercomputer with 3,600 NVIDIA A100 GPUs. Numerical tests demonstrate robust weak and strong scaling, with speedups exceeding 15× compared to previous versions. ChASE also outperforms state-of-the-art eigensolvers, such as ELPA, when computing up to 10% of a matrix’s eigenpairs.

This work showcases how algorithmic and hardware-driven optimizations enable ChASE to address large-scale eigenvalue computations on modern supercomputers. The enhanced library is poised to tackle emerging challenges in high-performance scientific computing, with ongoing efforts to extend support to AMD GPUs and next-generation platforms.

Contributors:

As supercomputing platforms evolve, traditional direct eigensolvers struggle to scale effectively due to memory constraints and communication bottlenecks. Lack of scaling becomes particularly acute when computing extremal eigenpairs for dense Hermitian matrices, which are essential in applications such as condensed matter physics and quantum chemistry. The Chebyshev Accelerated Subspace Eigensolver (ChASE) offers a scalable alternative by leveraging iterative methods and Chebyshev polynomial filters. However, earlier versions of ChASE faced limitations in distributed multi-GPU environments, including redundant computations, inefficient communication patterns, and excessive memory usage.

To overcome these challenges, we present a significantly enhanced ChASE implementation. First, we redesigned the parallelization scheme, distributing computational tasks like QR factorization and Rayleigh-Ritz projection across a 2D MPI grid. This reduces redundancy and optimizes memory usage. Second, communication-avoiding CholeskyQR algorithms were introduced, replacing traditional Householder QR factorization. Guided by accurate condition number estimation, these variants provide a balance between stability and efficiency. Third, NVIDIA’s NCCL library was integrated to replace MPI-based communication, enabling GPU-native collective operations and eliminating host-device data transfers.

Our optimized ChASE implementation scales to problem sizes up to N=10^6 and operates efficiently on JUWELS-Booster, a 900-node supercomputer with 3,600 NVIDIA A100 GPUs. Numerical tests demonstrate robust weak and strong scaling, with speedups exceeding 15× compared to previous versions. ChASE also outperforms state-of-the-art eigensolvers, such as ELPA, when computing up to 10% of a matrix’s eigenpairs.

This work showcases how algorithmic and hardware-driven optimizations enable ChASE to address large-scale eigenvalue computations on modern supercomputers. The enhanced library is poised to tackle emerging challenges in high-performance scientific computing, with ongoing efforts to extend support to AMD GPUs and next-generation platforms.

Contributors:

Format

On DemandOn Site