Motivated by Challenges: Harnessing AI to Revolutionize Resource Management and Workflow Management in High-Performance Computing

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Women in HPC Poster

ML Systems and Tools · Performance Measurement · Resource Management and Scheduling · System and Performance Monitoring

Information

The poster is on display and will be presented at the poster pitch session.

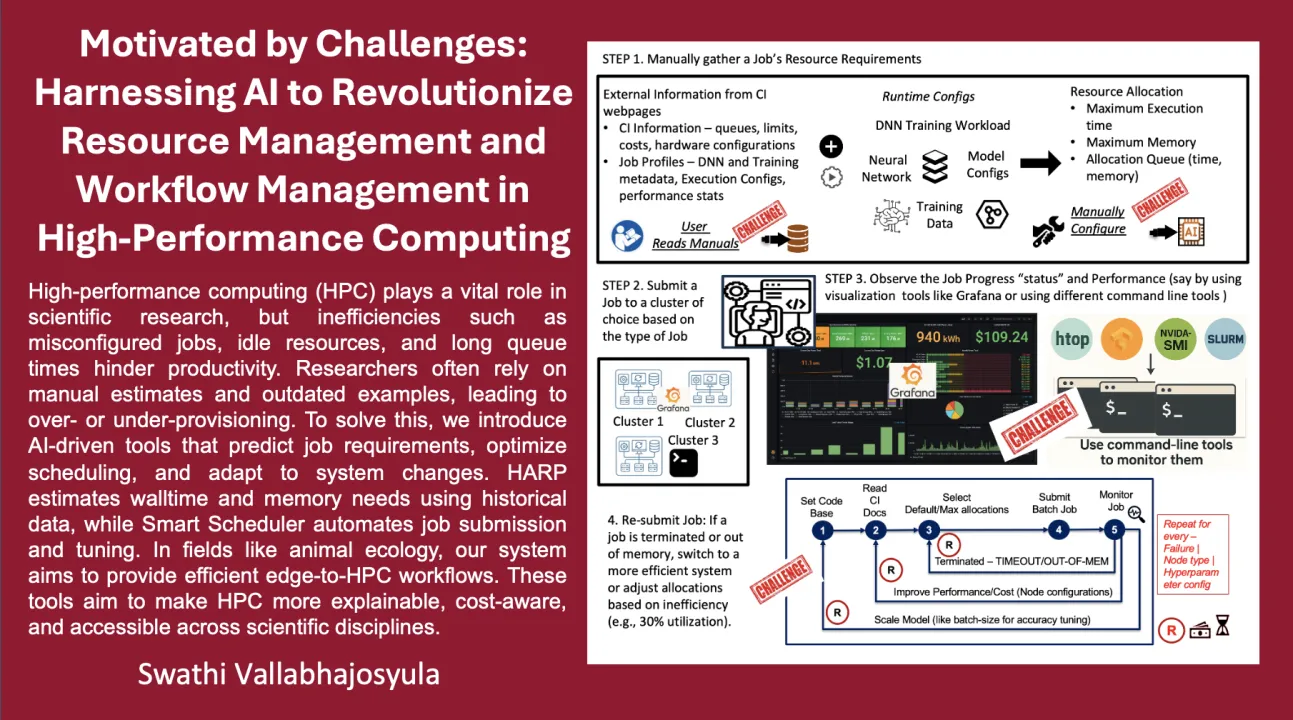

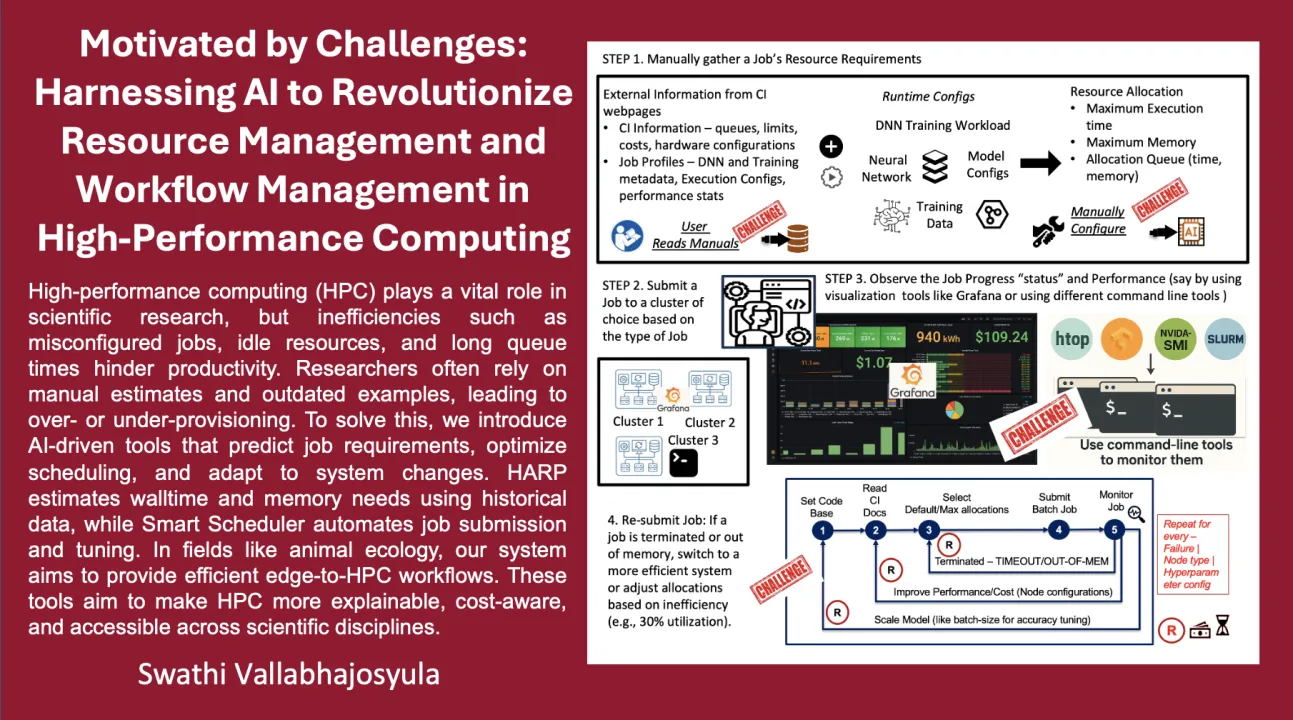

My fascination with the intersection of artificial intelligence (AI) and high-performance computing (HPC) began during my work on computationally intensive projects such as genome sequencing and machine learning workflows. These experiences revealed critical inefficiencies in HPC environments—specifically, in resource provisioning, job scheduling, and system utilization. Scientific progress was often delayed due to jobs being misconfigured, resources over-provisioned, or hardware underutilized. CPUs and GPUs frequently sat idle while jobs queued up unnecessarily, increasing energy consumption and operational costs. These problems are not unique to one field; they affect diverse domains including deep learning, climate modeling, and large-scale data analytics. This widespread inefficiency highlighted an urgent need for smarter, AI-driven resource management strategies.

Motivated by this challenge, my Ph.D. research focuses on building scalable, practical AI tools to optimize HPC usage. A core contribution is the development of the HPC Application Resource Predictor (HARP), a machine learning-based tool that estimates execution time and resource requirements based on system configuration, job type, and dataset size. HARP enables researchers to move beyond heuristic guesswork toward more accurate, data-informed allocation decisions. In tandem, I designed intelligent scheduling frameworks that automate job submission, monitoring, and dynamic rescheduling. These systems adapt in real time to workload behavior and HPC resource availability, helping users avoid manual profiling and misallocation pitfalls.
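
HARP's model and feature set are not described on this page, so the following is only a minimal sketch of the general idea behind a runtime predictor of this kind, not HARP itself. It assumes a hypothetical job-history schema (`Run`) and substitutes a simple log-log least-squares fit, computed separately for each (system configuration, job type) pair, for whatever model HARP actually uses. The helper names `fit`, `predict_runtime_s`, and `walltime_request` are illustrative, as is the 20% safety margin used to turn a prediction into an HH:MM:SS time limit of the kind batch schedulers such as Slurm accept.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    """One historical job record (hypothetical schema, not HARP's)."""
    system: str        # system configuration, e.g. a partition or node type
    job_type: str      # e.g. "training" or "inference"
    dataset_gb: float  # input dataset size in GB
    runtime_s: float   # observed wall-clock runtime in seconds


def fit(runs):
    """Fit log(runtime) = a + b * log(dataset_gb) separately per (system, job_type)."""
    groups = defaultdict(list)
    for r in runs:
        groups[(r.system, r.job_type)].append(
            (math.log(r.dataset_gb), math.log(r.runtime_s)))
    models = {}
    for key, pts in groups.items():
        if len({x for x, _ in pts}) < 2:
            continue  # a slope needs at least two distinct dataset sizes
        n = len(pts)
        mx = sum(x for x, _ in pts) / n
        my = sum(y for _, y in pts) / n
        sxx = sum((x - mx) ** 2 for x, _ in pts)
        b = sum((x - mx) * (y - my) for x, y in pts) / sxx
        models[key] = (my - b * mx, b)  # (intercept, slope) in log-log space
    return models


def predict_runtime_s(models, system, job_type, dataset_gb):
    """Predicted runtime in seconds; raises KeyError for an unseen (system, job_type)."""
    a, b = models[(system, job_type)]
    return math.exp(a + b * math.log(dataset_gb))


def walltime_request(predicted_s, margin=1.2):
    """Pad a prediction by `margin`, round up to whole minutes, format as HH:MM:SS."""
    minutes = math.ceil(predicted_s * margin / 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:00"


if __name__ == "__main__":
    # Illustrative history only; these numbers are made up for the example.
    history = [
        Run("gpu-node", "training", 10, 600),
        Run("gpu-node", "training", 20, 1200),
        Run("gpu-node", "training", 40, 2400),
    ]
    models = fit(history)
    est = predict_runtime_s(models, "gpu-node", "training", 80)
    print(round(est), walltime_request(est))
```

Because the made-up runtimes above grow linearly with dataset size, the fitted slope is close to 1 and the 80 GB prediction is close to 4800 s. A real predictor would draw on many more features and far more history; the point of the sketch is only that a data-driven estimate, plus an explicit safety margin, replaces a guessed time limit.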

My work also includes studying allocation patterns in shared supercomputing environments, where complex policies and user variability create additional burdens. To address this, I applied AI models to automate resource optimization across varying system constraints. One practical deployment is in animal ecology, where my framework supports an end-to-end AI workflow: edge devices collect sensor data, cloud platforms handle preprocessing, and HPC systems are used to retrain models or run centralized inference for tasks like data labeling. These workflows are designed to optimize both accuracy and resource cost, significantly reducing time-to-science and energy consumption.

Beyond technical development, my work also addresses a broader systemic challenge: the inequity in access to HPC and AI infrastructure, and the hesitation many researchers feel due to uncertainty about computational costs. This fear often becomes a barrier to fully leveraging AI and HPC for scientific advancement. During the NAIRR (National AI Research Resource) pilot workshops, many researchers emphasized the difficulty of estimating and justifying the cost of running AI workflows, particularly when applying for grants or compute allocations. NAIRR’s mission to democratize access to data and computing resources strongly aligns with my own goals. Looking ahead, my future work will focus on enhancing the explainability of AI-driven scheduling and prediction tools—not only to build trust in automated decisions but also to help researchers clearly understand the trade-offs between performance, cost, and efficiency. This will empower scientists to make informed, reproducible, and impactful decisions while enabling more equitable and sustainable access to AI-powered discovery.

Format

On Demand · On Site