Modeling and Simulating Spiking Neurons and Synaptic Plasticity with NESTML on HPC

Tuesday, June 10, 2025 3:00 PM to Thursday, June 12, 2025 4:00 PM · 2 days 1 hr. (Europe/Berlin)

Foyer D-G - 2nd floor

Women in HPC Poster

Bioinformatics and Life Sciences

Information

The poster is on display and will be presented at the poster pitch session.

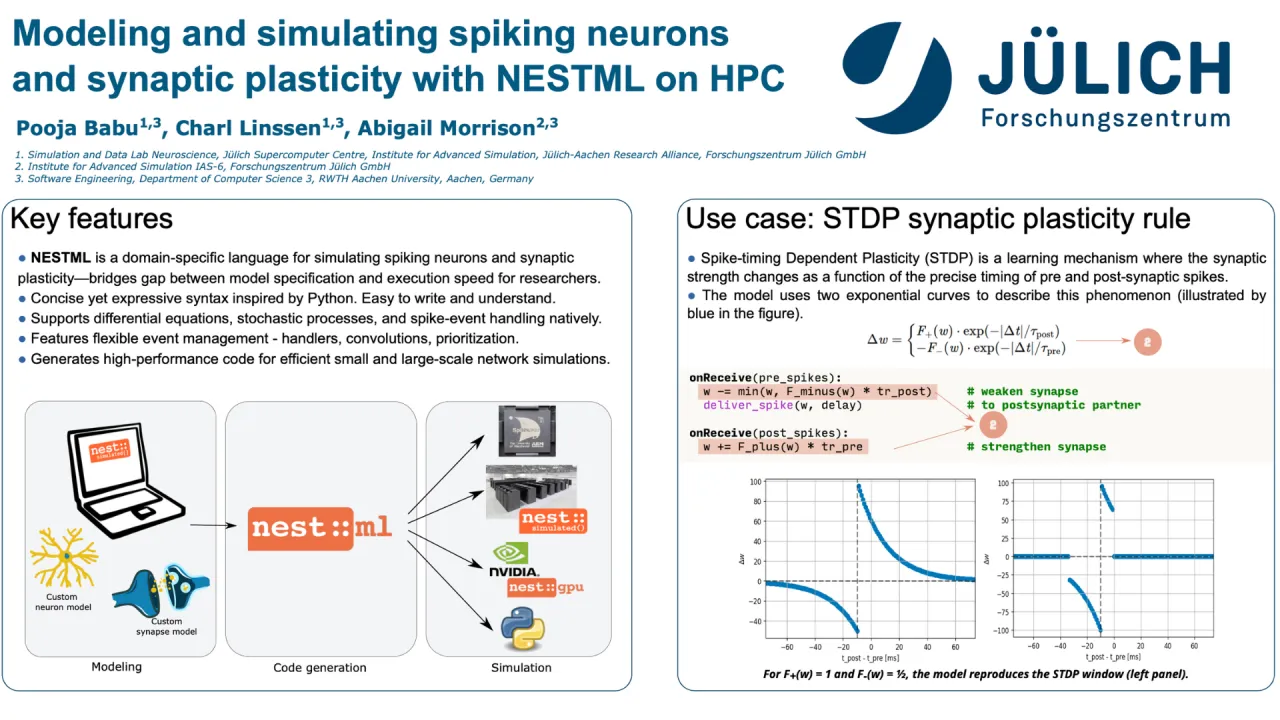

NESTML is a domain-specific modeling language for spiking neuronal networks incorporating synaptic plasticity [1]. It has been designed over the last 10 years to support researchers in computational neuroscience by allowing them to specify models of neurons and synapses in a precise and intuitive way. These models can subsequently be used in dynamical simulations of spiking neural networks (for potentially very large network sizes), employing high-performance simulation code generated by the NESTML toolchain. The code extends a simulation platform (such as NEST Simulator [2], NEST GPU [3], or SpiNNaker [4]) with new and easy-to-specify neuron and synapse models, formulated in NESTML. Combining a user-friendly modeling language with automated code generation makes large-scale neural network simulation accessible to neuroscience researchers without requiring any training in computer science [5].

NESTML features a concise yet expressive syntax, inspired by Python. There is direct language support for (spike) events, differential equations, convolutions, stochasticity, arbitrary algorithms using imperative programming concepts, and flexible event management using handler functions and prioritization. These features make models easier to write and maintain and make models in general more findable, accessible, interoperable, and reusable (‘FAIR’ principles).

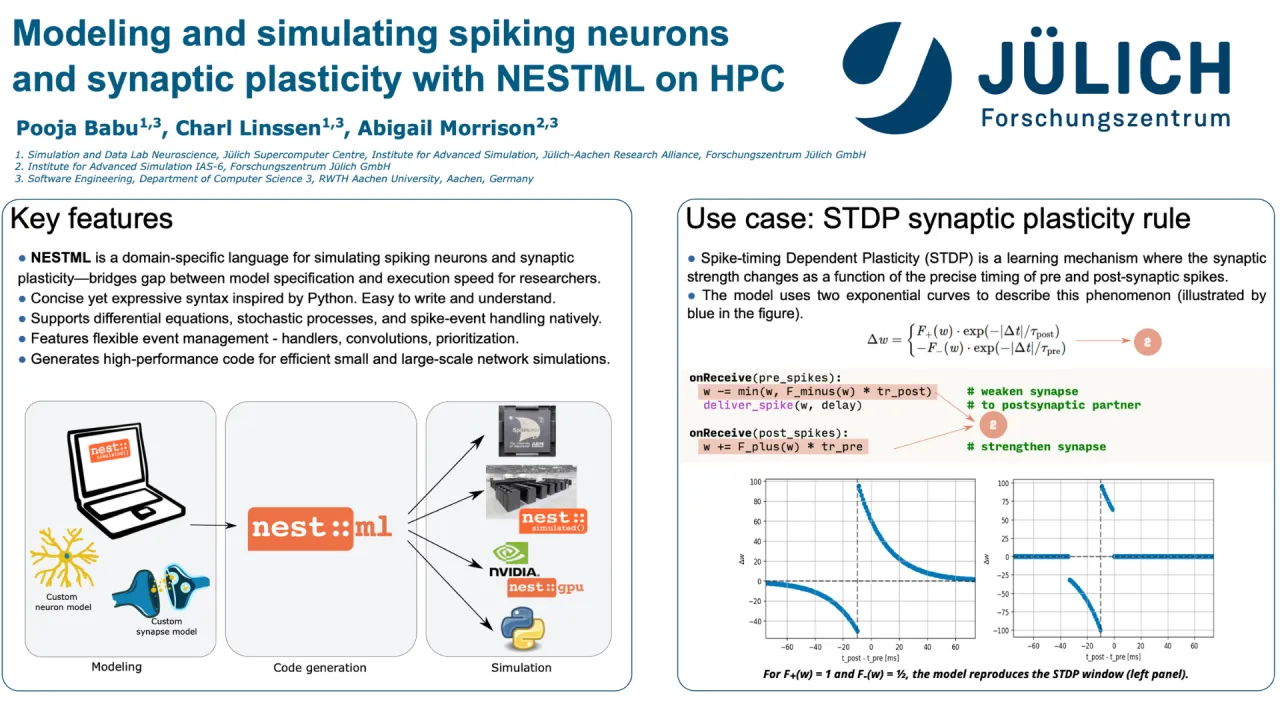

Models specified in the NESTML syntax are processed by an open-source toolchain that generates fast code for a given target simulator platform. Here we demonstrate NESTML's code generation approach for NEST Simulator, along with performance and memory benchmarks for large-scale simulations. To do so, we run a balanced random network of adaptive exponential (AdEx) integrate-and-fire neurons in combination with spike-timing-dependent plasticity (STDP) synapses. The networks are simulated on a high-performance computing (HPC) cluster. We perform strong-scaling and weak-scaling experiments and compare the network's performance with NESTML-generated models against its performance with the NEST built-in models.
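For readers unfamiliar with the AdEx neuron, its dynamics can be illustrated with a minimal sketch in plain Python. This is not the NESTML model or the generated code used in the benchmarks. It is a forward-Euler integration of the standard AdEx equations for a single neuron, and the function name `simulate_adex`, the parameter values, and the step size are all assumptions chosen for illustration.

```python
import math


def simulate_adex(i_ext_pa, t_stop_ms=200.0, dt_ms=0.01):
    """Integrate one AdEx neuron with forward Euler; return spike times (ms).

    Units: pF, nS, mV, ms, pA. All parameter values are illustrative
    assumptions and are not taken from the poster.
    """
    c_m = 281.0      # membrane capacitance (pF)
    g_l = 30.0       # leak conductance (nS)
    e_l = -70.6      # leak reversal potential (mV)
    v_t = -50.4      # exponential threshold (mV)
    delta_t = 2.0    # slope factor of the exponential term (mV)
    tau_w = 144.0    # adaptation time constant (ms)
    a = 4.0          # subthreshold adaptation coupling (nS)
    b = 80.5         # spike-triggered adaptation increment (pA)
    v_reset = -60.0  # reset potential after a spike (mV)
    v_peak = 0.0     # spike detection threshold (mV)

    v, w = e_l, 0.0  # start at rest with no adaptation current
    spikes = []
    n_steps = int(round(t_stop_ms / dt_ms))
    for step in range(n_steps):
        # Membrane equation: leak + exponential spike initiation
        # - adaptation current + external input.
        exp_term = g_l * delta_t * math.exp((v - v_t) / delta_t)
        dv = (-g_l * (v - e_l) + exp_term - w + i_ext_pa) / c_m
        # Adaptation current relaxes towards a * (v - e_l).
        dw = (a * (v - e_l) - w) / tau_w
        v += dt_ms * dv
        w += dt_ms * dw
        # Spike: record the time, reset the voltage, increment adaptation.
        if v >= v_peak:
            spikes.append((step + 1) * dt_ms)
            v = v_reset
            w += b
    return spikes
```

Calling `simulate_adex(1000.0)` returns the spike times for a constant 1000 pA input, while `simulate_adex(0.0)` leaves the neuron at rest. A production simulator would typically use an adaptive or higher-order solver rather than fixed-step forward Euler.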

We compare the following combinations of neuron and synapse models: (i) NEST Simulator built-in neuron model + NEST Simulator built-in synapse model, (ii) NESTML neuron model + NEST Simulator built-in synapse model, (iii) NESTML neuron model + NESTML synapse model. We show that the NESTML-generated models perform nearly as well as the handwritten models, with a small reduction in performance (5% to 6%) and a higher memory footprint (30%), specifically for combination (iii), which can be attributed to the generic and model-agnostic process of code generation. We believe that this slight loss in performance is more than compensated for by the significant time savings achieved in writing and verifying the numerics of new models, as NESTML allows the modeling process to be carried out in an agile and incremental manner, further speeding up the entire model development cycle. In future work, we will focus on optimizations and improvements in the toolchain, aiming for performance gains that put the generated code on par with or even ahead of the NEST built-in models.
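The quantities behind such a comparison can be written down compactly. The sketch below uses the common textbook definitions of strong-scaling efficiency, weak-scaling efficiency, and relative overhead. The poster does not state which exact definitions were used, so the formulas and the function names are assumptions for illustration only.

```python
def strong_scaling_efficiency(t_base, n_base, t_n, n):
    """Fixed total problem size: ideal run time shrinks in proportion to n.

    t_base is the run time on n_base processes, t_n the run time on n
    processes. A value of 1.0 means perfect scaling.
    """
    return (t_base * n_base) / (t_n * n)


def weak_scaling_efficiency(t_base, t_n):
    """Fixed problem size per process: ideal run time stays constant.

    t_base is the run time of the smallest configuration, t_n the run time
    of the scaled-up configuration. A value of 1.0 means perfect scaling.
    """
    return t_base / t_n


def relative_overhead(reference, generated):
    """Fractional increase of a cost (run time or memory) over a reference.

    For example, reference could be the cost with built-in models and
    generated the cost with code-generated models.
    """
    return (generated - reference) / reference
```

With these definitions, a run-time reduction in performance of 5% corresponds to `relative_overhead(t_builtin, t_generated)` being about 0.05, where `t_builtin` and `t_generated` are hypothetical measured wall-clock times for the same network.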

[1] https://nestml.readthedocs.io/

[2] Gewaltig & Diesmann, Scholarpedia 2(4), 2007

[3] Golosio et al., Frontiers in Computational Neuroscience, 2021

[4] Furber et al., Proceedings of the IEEE 102(5), 2014

[5] Blundell et al., Frontiers in Neuroinformatics 12, 2018


Format

On Demand, On Site